How To Import All Data From Salesforce To Hadoop

Salesforce2hadoop (sf2hadoop) is not the only way to import data from your Salesforce cloud, but it is one of the more comprehensive ones. It lets you pull all of your Salesforce data and store it on your local file system or in HDFS in Avro format, so that your business data ends up in one place. It uses your username and password to access the Salesforce API, which makes it straightforward to import all your data from Salesforce to Hadoop.

What are its defining features?

Salesforce2hadoop has a few key features:

- You can pick the types of records for importing.

- It reads the available record types and their structure from the Enterprise WSDL of your Salesforce CRM.

- It stores all imported data in Avro format.

- After the first import, it pulls only the data that has changed since your last import.

- Each time you import data, salesforce2hadoop keeps a record of that import.

- It works on any system that has Java 7 or higher.

- It also works with newer Salesforce APIs and with the developer's edition, Salesforce DX.

How to install it?

GitHub hosts compiled binaries you can use to install salesforce2hadoop. Download one, unzip it, and you are ready to go.

If you want to build salesforce2hadoop from source, you will need Scala and SBT on your system.

It is a simple command-line application. With Java 7 or higher installed, you can run it and check which options are available. The application needs to read the Enterprise WSDL to understand the structure of your Salesforce data. Salesforce2hadoop has two commands for importing Salesforce data: init and update.

Sf2hadoop also needs a base path where it can store the data it imports. You provide this as a URI that Hadoop understands. Right now, the application supports HDFS and the local file system for storage. It stores all data under the base path you provide, and each record type gets its own directory.
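To make the layout concrete, here is a minimal Python sketch of how a per-record-type directory could be derived from a base path. The function name, the example base URI, and the exact directory naming are assumptions made for illustration; the actual layout sf2hadoop writes may differ.

```python
import posixpath


def record_type_dir(base_path: str, record_type: str) -> str:
    """Return the directory for one record type under the base path.

    Hypothetical helper: it only joins the two parts with a single "/".
    """
    return posixpath.join(base_path, record_type)


# Example base URI and record types, chosen for illustration only.
for record_type in ["Account", "Contact"]:
    print(record_type_dir("hdfs:///data/salesforce", record_type))
```

The same helper works for a local base path such as `file:///tmp/salesforce`, since only the prefix changes.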

Sf2hadoop keeps a chronological record of your imports. This lets you switch to incremental imports after completing the initial import. Due to restrictions in the existing Salesforce API, an incremental import can only go back 30 days. If you attempt an incremental import that covers a longer period, expect errors and incomplete data.
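The 30-day limit can be checked before you decide how to import. The sketch below is not part of sf2hadoop; it is a hypothetical pre-check, assuming you know when your last import ran, that tells you whether an incremental import still falls inside the window.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# The 30-day limit described in the text above.
MAX_LOOKBACK = timedelta(days=30)


def incremental_import_is_safe(last_import: Optional[datetime],
                               now: datetime) -> bool:
    """Return True if an incremental import would stay within the limit."""
    if last_import is None:
        # No earlier import on record, so there is nothing to update from.
        return False
    return now - last_import <= MAX_LOOKBACK


now = datetime(2024, 3, 1, tzinfo=timezone.utc)
recent = datetime(2024, 2, 20, tzinfo=timezone.utc)   # 10 days earlier
stale = datetime(2024, 1, 1, tzinfo=timezone.utc)     # 60 days earlier

print(incremental_import_is_safe(recent, now))
print(incremental_import_is_safe(stale, now))
```

When the check fails, scheduling imports more often is the simplest way to stay inside the window.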

How to make the most out of salesforce2hadoop?

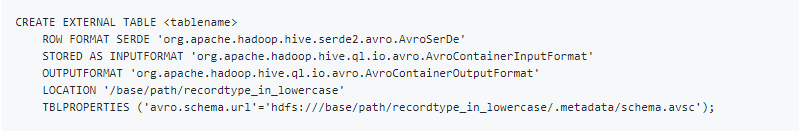

You can start by creating a Hive table backed by the data imported in Avro format. You can do this from any recent Hive shell.
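As a rough illustration, the Python sketch below builds a Hive statement for an external table stored as Avro. The table name, data location, and schema URL are hypothetical placeholders; in particular, where the Avro schema file for your import lives is an assumption you will need to check against your own base path.

```python
def hive_avro_table_ddl(table: str, location: str, schema_url: str) -> str:
    """Build a CREATE EXTERNAL TABLE statement for Avro-backed data.

    All three arguments are placeholders supplied by the caller.
    """
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {table}\n"
        f"STORED AS AVRO\n"
        f"LOCATION '{location}'\n"
        f"TBLPROPERTIES ('avro.schema.url'='{schema_url}');"
    )


# Hypothetical values, for illustration only.
print(hive_avro_table_ddl(
    table="account",
    location="hdfs:///data/salesforce/account",
    schema_url="hdfs:///schemas/account.avsc",
))
```

Declaring the table as external means that dropping it in Hive leaves the imported files in place.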

The table will also be visible in Impala. You may need to run INVALIDATE METADATA to see the table right away. After each data import, refresh the table so that Impala knows about the newly available data.

Newer Salesforce versions have made marketing management and data integration much easier for large enterprises that have terabytes of data sitting in Salesforce databases.
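A small Python sketch of that routine follows. It only assembles the Impala statements as strings; the function name and the `first_time` flag are hypothetical, and how you submit the statements (for example through the Impala shell) is left to you.

```python
from typing import List


def impala_statements(table: str, first_time: bool) -> List[str]:
    """Return the Impala statements to run after an import."""
    statements = []
    if first_time:
        # Makes a table that was just created in Hive visible to Impala.
        statements.append(f"INVALIDATE METADATA {table};")
    # Tells Impala to pick up data files added by the latest import.
    statements.append(f"REFRESH {table};")
    return statements


# Hypothetical table name, for illustration only.
for statement in impala_statements("account", first_time=True):
    print(statement)
```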

Author Bio

David Wicks is a data expert. He has been working with Hadoop ever since the dawn of big data. To find out how to manage big data better with Salesforce integration, follow Flosum.com.
